The latest buzz on the internet is a FRED chart, published by the Federal Reserve Bank of St. Louis, of Jeremy Piger’s dynamic factor Markov-switching (DFMS) recession probability index. The index recently jumped from less than 1% to 18%. Because past readings of 18% have always coincided with a recession or occurred very shortly before one, some commentators have inferred that the 18% probability is really 100%. Why this inference is so ludicrous is also aptly described on Jeff Miller’s site. Most people publishing these wild 100% recession claims never bothered to read or understand the academic papers in which the authors explain the model. Some even went so far as to infer that the Fed endorses the model, and in another case that Piger actually works for the Fed. The mind boggles.

Piger and Chauvet are highly respected academics who pioneered the mainstream use of dynamic factor Markov models for US recession modelling and co-authored the papers explaining the methodology. By their own admission, documented in the papers and in separate email communications with me last year, a recession call made with a reasonable degree of certainty that you are not witnessing a false positive (a wrong signal) requires at least 2 or 3 months of readings over 80%. I think this is too conservative and would look for 3 or more months over 50% before making inferences. According to Chauvet:

A fast assessment on the state of the economy can be obtained by observing whether the real time probability of recession has crossed the 50% threshold for a month. This rule maximizes the speed at which a turning point might be identified, but increases the chances of declaring a false positive.

This is because the probabilities from these models can be very volatile, both because the underlying data are revised and because of the empirical fact that business cycle phases are persistent, which is explicitly modelled in the Markov-switching framework. What this means is that a bad month of data will be assigned an even higher probability of being a recession month if the following month is also bad. Similarly, a bad month of data will be assigned a lower probability of recession if the following months are less bad. So, as more bad or good data is revealed, the probability that earlier bad months were recession months increases or decreases accordingly. You therefore have a “double revision” effect: the data are revised, and the probabilities are re-estimated on top of that.
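The persistence effect described above can be illustrated with a toy two-state Markov-switching model. This is only a minimal sketch, not the authors' DFMS model: the regime means, volatility, transition probabilities and monthly data are all made-up assumptions, and a standard filter-then-smoother recursion (Hamilton filter plus Kim smoother) stands in for whatever estimation Piger and Chauvet actually use.

```python
import math


def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def recession_probabilities(data, means=(0.3, -0.6), sd=0.5,
                            stay=(0.95, 0.80), prior=(0.9, 0.1)):
    """Return (real_time, smoothed) recession probabilities for each month.

    State 0 is expansion, state 1 is recession. All parameters are
    hypothetical values chosen for illustration only.
    """
    # P[i][j] = probability of moving from state i to state j.
    P = [[stay[0], 1.0 - stay[0]], [1.0 - stay[1], stay[1]]]
    predicted, filtered = [], []
    prev = list(prior)
    for y in data:
        # Forward pass: uses only data available up to this month.
        pred = [prev[0] * P[0][j] + prev[1] * P[1][j] for j in (0, 1)]
        joint = [pred[j] * normal_pdf(y, means[j], sd) for j in (0, 1)]
        total = sum(joint)
        prev = [v / total for v in joint]
        predicted.append(pred)
        filtered.append(prev)
    # Backward pass: re-estimates earlier months using later data.
    smoothed = [None] * len(data)
    smoothed[-1] = filtered[-1]
    for t in range(len(data) - 2, -1, -1):
        smoothed[t] = [
            filtered[t][i] * sum(P[i][j] * smoothed[t + 1][j] / predicted[t + 1][j]
                                 for j in (0, 1))
            for i in (0, 1)
        ]
    return [f[1] for f in filtered], [s[1] for s in smoothed]


# Hypothetical monthly growth readings; the last month is a "bad" one.
history = [0.3, 0.4, 0.2, -0.3]
real_time, _ = recession_probabilities(history)
_, smoothed_bad = recession_probabilities(history + [-0.5, -0.6])
_, smoothed_good = recession_probabilities(history + [0.4, 0.3])

print("real-time reading for the bad month:", round(real_time[3], 3))
print("re-estimated after two more bad months:", round(smoothed_bad[3], 3))
print("re-estimated after two good months:", round(smoothed_good[3], 3))
```

In this toy setup the same bad month is marked up sharply once further bad months arrive and marked down to almost nothing once good months follow, even though the data for that month itself never changed.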

Below is a chart of this effect on Piger’s model, based on vintage data he sent me when I first suggested to him a while back that it would be nice to start tracking vintages. Clearly, some bad data came out during the last summer swoon and the probabilities climbed accordingly; subsequent months then provided better data and the prior months were revised down. The revisions to the probabilities are big (a move from 4.8% to 1%, for example, is a major one).

Chauvet does not publish vintages for her model, but I have a data file that I received from her in late 2011 for some research we were doing, which I have compared to her latest data set (smoothed probabilities).

Here we see that 6 months of readings over 25% never led to a recession. We also see how dramatic the revisions arising from the “empirical persistence” built into these models can be: August 2011 and September 2011 were downgraded from around 28% to less than 5%. This is not a revision, it is a complete “re-think”!

One final thing you need to consider with both Chauvet’s and Piger’s DFMS models is that, because of a two-month delay in the availability of the manufacturing and trade sales series, the probabilities of recession are also available only with a two-month delay. So the reading you see now applies to the economy as of August 2012. We’ve had one extra set of data readings since then that has not been incorporated into these models. These readings are shown below (on the extreme right of the charts) together with our preferred metric for sales, namely the real retail sales figure from FRED, which is published monthly and gives us more timely data. We can see that 3 of the 4 were up strongly in the last month. Clearly, Piger’s model took fright at the plunge in industrial production in August, but this has since rebounded smartly.

Both Chauvet and Piger maintain and update these Markov models separately, and I have been following them diligently for 18 months. Although both models are based on the same academic papers the two co-authored, they give different readings over time. Marcelle Chauvet informs me that the models are the same but use a different employment series and a different estimation method.

Apart from the “revision effects” inherent in persistence models, I have discovered from using probability indexes over time that small revisions to the underlying data can change recession probabilities significantly. Because probabilities are so “sensitive” (close to zero one minute and sky-rocketing the next) due to the mathematical methods used to determine them, you can see significant spikes in recession probabilities, only for them to plunge again after a minor data revision. The revision may not look like much in the underlying data, but its effect on the probabilities is amplified. For this reason we prefer to inspect the underlying index data to make inferences, and we treat the recession probabilities as more of an “interest factor”, or one more input into our overall inference.
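The amplification can be sketched with a deliberately simplified calculation. The example below is an assumption-laden illustration, not how the DFMS models compute their probabilities: it treats a single monthly index reading as coming from one of two normal distributions (expansion or recession) and applies Bayes' rule. The means, standard deviation and prior are hypothetical.

```python
import math


def normal_pdf(x, mean, sd):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def recession_probability(reading, expansion_mean=0.3, recession_mean=-0.6,
                          sd=0.2, prior=0.1):
    """Posterior probability of recession given one index reading.

    All parameter values are hypothetical and chosen for illustration.
    """
    recession = prior * normal_pdf(reading, recession_mean, sd)
    expansion = (1.0 - prior) * normal_pdf(reading, expansion_mean, sd)
    return recession / (recession + expansion)


calm = recession_probability(0.0)            # an ordinary month
first_print = recession_probability(-0.30)   # weak initial estimate
revised = recession_probability(-0.20)       # same month after a small revision

print(round(calm, 3), round(first_print, 3), round(revised, 3))
```

Under these assumptions, a revision of only 0.1 in the underlying reading moves the probability from roughly three in four to roughly one in four, because the reading sits in the narrow zone where the two regimes overlap. Away from that zone the probability barely moves, which is why the series looks flat near zero and then jumps.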

It is clear that the amplification effect of revisions on recession probabilities, coupled with the possibly large “re-estimations” of probabilities arising from the Markov models’ empirical persistence, makes it ludicrous to jump to the conclusion many bloggers are currently reaching, namely that a reading of 18% has only ever been seen during a recession or just before one and therefore the probability of recession is 100%. To cater for the volatility of the readings, some smoothing is applied to eliminate false positives. The probabilities posted on FRED are “smoothed probabilities”, that is, the past spikes that occurred in real time have been smoothed away. Real-time probabilities are very noisy, and a little bump of 15%, 20% or even 50% does not mean much in terms of signalling recessions. If these types of inferences are going to be made, they should rather be made against the backdrop of the real-time (unrevised) record of DFMS probabilities depicted below. As you can see, making recession calls based on an observation of 20% will lead to many false calls. The real-time probabilities below look very different from the smoothed Piger probabilities published on FRED, don’t they?
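The difference between reacting to a single reading and waiting for a sustained signal, as in the threshold rules discussed earlier, can be sketched in a few lines. The function name and the series of readings are hypothetical and are not actual DFMS data; the thresholds are the ones mentioned in this article.

```python
def first_call(probabilities, threshold, months):
    """Index of the month in which a recession call is triggered, else None.

    A call is triggered once `months` consecutive readings exceed `threshold`.
    """
    run = 0
    for i, p in enumerate(probabilities):
        run = run + 1 if p > threshold else 0
        if run >= months:
            return i
    return None


# Hypothetical real-time readings: an isolated spike that fades,
# followed much later by a sustained rise.
readings = [0.01, 0.02, 0.22, 0.55, 0.06, 0.03, 0.02,
            0.30, 0.62, 0.85, 0.91, 0.97]

single_reading = first_call(readings, threshold=0.20, months=1)  # naive 20% trigger
fast_rule = first_call(readings, threshold=0.50, months=1)       # one month over 50%
three_over_50 = first_call(readings, threshold=0.50, months=3)   # 3 months over 50%
three_over_80 = first_call(readings, threshold=0.80, months=3)   # 3 months over 80%

print(single_reading, fast_rule, three_over_50, three_over_80)  # prints: 2 3 10 11
```

In this made-up series the two single-month rules fire on the early spike, which fades within a month, while the persistence rules wait for the sustained rise. The price of the stricter rules is a later call, which is the trade-off between speed and false positives described in the quote from Chauvet.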

Look, our firm is no Pollyanna when it comes to the U.S. economy, and we are not denying that there is useful and valid informational content in the DFMS models’ high readings for August. These academics are well respected, know what they are doing and have been running these models for some time now. But you have to make the correct interpretations and understand what you are looking at! The recovery is tepid, but it’s still a recovery by many, many measures. I would bet that if you asked these two people whether we are in a recession now, they would say no. For every negative chart whipped out as vindication that the U.S. is in recession, you can whip out 5 positive charts in defence of expansion. We’ve done just this in January 2012 (“U.S Recession – an opposing view”), in March (“This index says no recession”) and again in July (“Recession not imminent”). The perma-bears argue that the Fed’s aggressive easing staved off recession. This is a weak excuse for their missed calls; they seem to have forgotten the first rule of the stock market: “Don’t Fight the Fed.” Factoring in monetary policy and its economic effects is par for the course in this business.

The costs of premature recession calling are just as high as the costs of calling it late. ECRI called recession with near certainty in August 2011, and in every one of the three summer swoons we have had since 2009 there was some person, perma-bear or chart out there proclaiming a 100% “imminent” recession. In the meantime the markets kept grinding higher. In fact, since August 2011 the S&P 500 has risen a staggering 27%, having been up as much as 31% at its peak. Initiating a market exit strategy based on ECRI’s recession call has by now been as costly as the average fall of the stock market during an economic recession. I can’t imagine which must be worse: sitting on the sidelines agonising in disbelief as the market keeps chugging 30% higher without you, or sitting in a buy-and-hold through a 30% recession decline. At the end of the day the result is the same, although the journeys are psychologically different in the pain they inflict.

Our own research, “Recession: Just how much warning is useful anyway”, indicates that a warning of 3-4 months before recession is optimal and that any exit strategies executed before this are likely to be costly. Additionally, contrary to the popular belief peddled by the perma-bears, even if you only exit the market on the exact date the recession starts, or even a month after, you will still do pretty well in avoiding the bulk of the corrective damage; the 3-4 months of advance lead time is not what delivers the main gains from exiting to avoid recession. Ultimately, when you assess a market asset allocation strategy that deploys recession forecasting, you need to assess two things to measure success: how accurate the call was in pinpointing the exact dates, and how close the call was to timing the market peak. For the stock market participant the first criterion is academic and the second is all-important. We discuss this in detail over here: Judging Recession Forecasting Accuracy.

Of course, when things are as sluggish as they are, the economy is even more vulnerable to external shocks plunging it into recession. Most recession forecasting models do not quantify these external risks. There are many of them, but not many more than there were in the last summer swoon, the summer swoon before that, and the one before that. The two major external risks to the US economy now are the looming fiscal cliff and a deepening global recession (see “On the brink of Global Recession”).

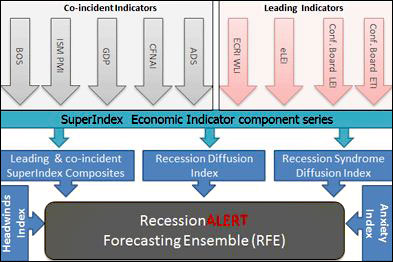

As with any complicated topic or discipline, one cannot rely on a single model for recession forecasting. We prefer to deploy a battery of diversified, robust and proven recession models when attempting to assess the risks of recession, which we do with our Recession Forecasting Ensemble.

Outside of this we also diligently track the 4 co-incident indicators used by the National Bureau of Economic Research in determining recessions, with “The NBER co-incident recession model – confirmation of last resort”. We are also about 75% of the way through research showing the resilience of this model in the face of revisions (Effects of revisions on the NBER recession model).

The latest report that we provide to subscribers each month on this “NBER Model” is attached below for your interest. It confirms that we have a tepid recovery printing new low-water marks for expansion, and that recession risks have risen to around 6.7%. The chart below showing the model’s US economic growth metric is enough to make anyone nervous. But we argue that this latest slump is the result of many leading economic indicators rolling over 5-6 months ago. These leading indicators have all been on upward trajectories for the last 3 months. Our guess (and it’s just that, a guess) is that the leading indicators’ trajectories are going to pull the co-incident data up again, barring an external shock or Congress screwing up the fiscal cliff issue of course!

The latest NBER Recession Model Report, which we publish for clients each month, is available below as of the end of October 2012. This is not a model based on persistence, meaning we only have revisions of the underlying data to consider. We do not “revise away” spikes in the past, as happens with DFMS models. Readings in the “now” can therefore be better compared with readings in the past on any historical chart. This is not to say that this is the only approach, or the best approach, but it does suggest that combining many different models is probably good sense. Click on the icon below to download the PDF report for the NBER Model.

Source: Recession Alert